How to use the Oracle database basically?

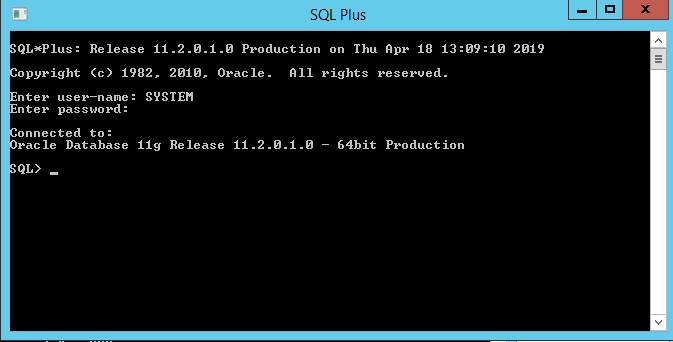

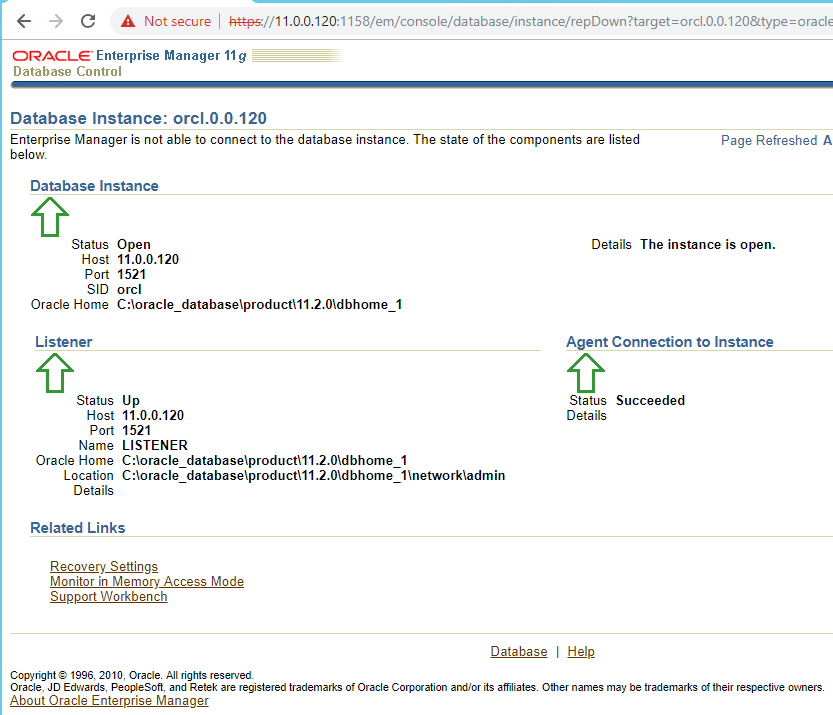

Recently, I need to study about the Oracle Database. In fact, I am not DBA. In this post, I will write the database queries to use later. I will use the Oracle SQL developer for this test.

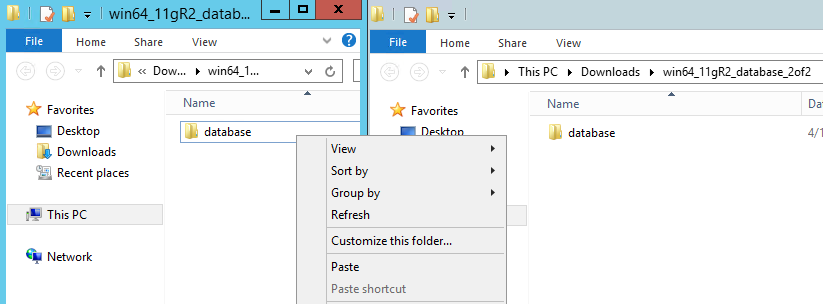

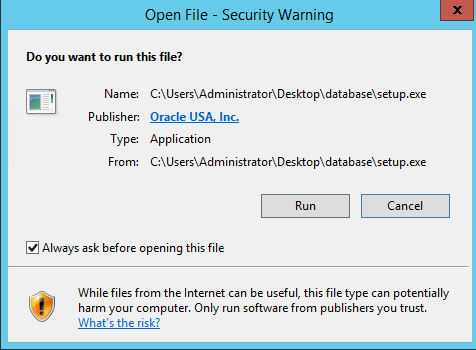

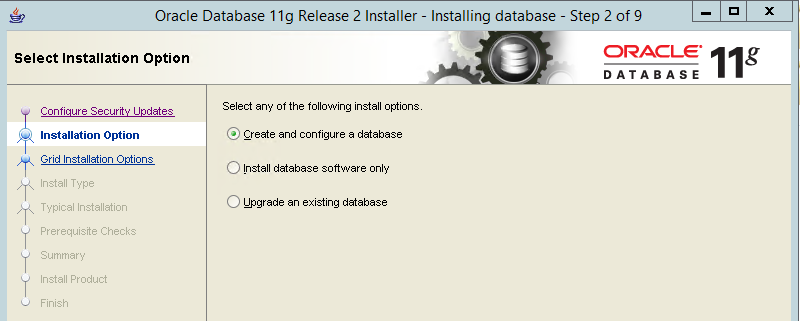

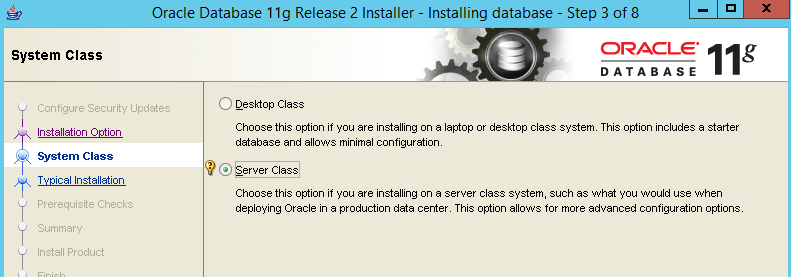

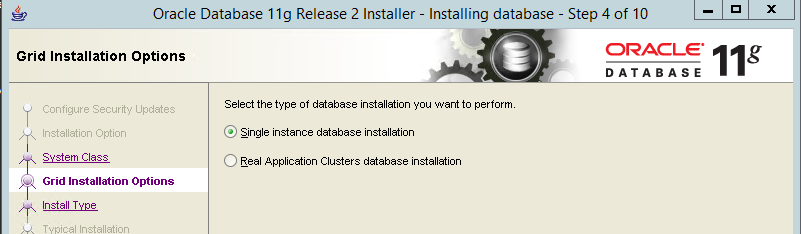

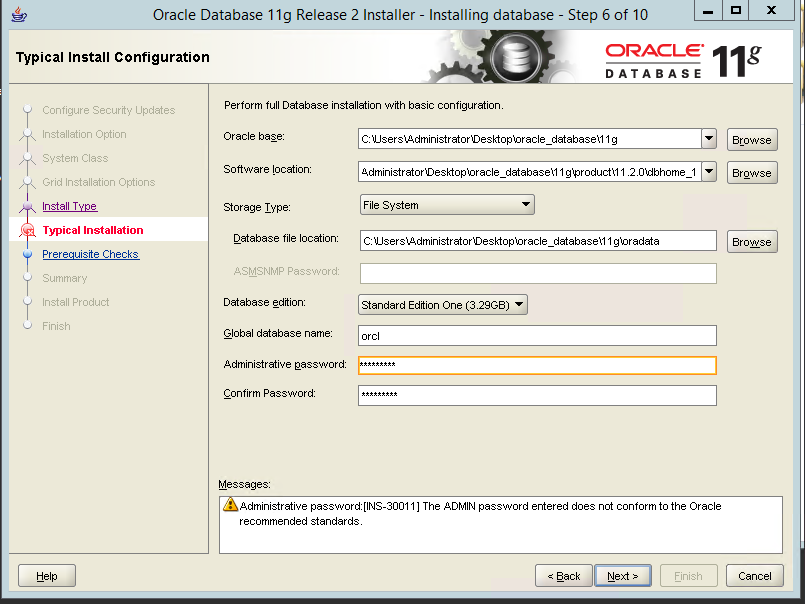

1. Download and Connect database

I followed this instruction, which introduce how to connect to database. I need to download Oracle SQL developer from here. After download, I can connect database with connect button on the left of top.

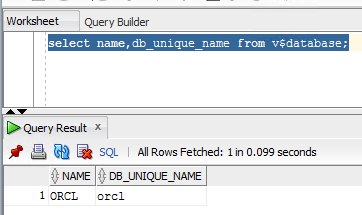

2. Check the Database Properties.

select * from database_properties; |

I can see configuration or properties from query like below. For example, I can see the "Default Temp Tablespace" which the user information is stored in.

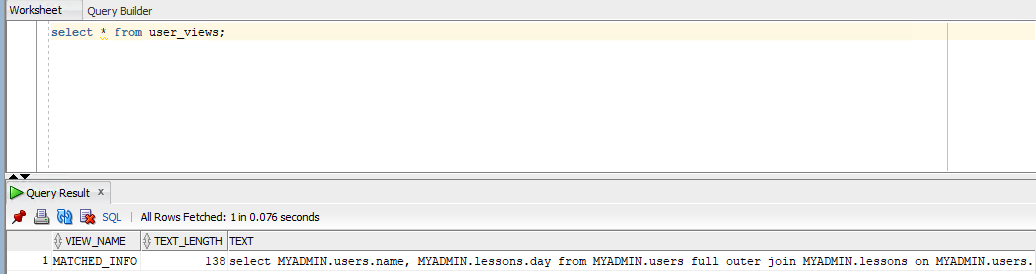

3. Create (Drop) User

This is important part to understand about Oracle Database. In Oracle, there is "schema" term. I think this is also user. All of objects such as table are connected to this schema. For example, "schema.table_name" is used as the table name when I create table. This instruction show how to create user.

I can get the all of users information in this database like below.

Now, I will create new user which is identified by the password.

create user virginiauser identified by password1; |

I can see the new user created with "select * from dba_users;".

If you want to remove or revoke user, follows the command below.

4. Grant Privileges and role.

However, this user does not have any role and privileges. In this instruction, there are comments for roles.

In my case, I am AWS Oracle RDS database. Look at the role assigned.

select * from dba_role_privs; |

There are so many roles assigned. There is no any role in user created before. However, there are so many role assigned for 'RDSADMIN' and master account.

| RDSADMIN |

CRENETADMIN |

| XDBADMIN |

XDBADMIN |

| EXECUTE_CATALOG_ROLE |

EXECUTE_CATALOG_ROLE |

| CTXAPP |

CTXAPP |

| DATAPUMP_IMP_FULL_DATABASE |

DATAPUMP_EXP_FULL_DATABASE |

| OPTIMIZER_PROCESSING_RATE |

OPTIMIZER_PROCESSING_RATE |

| CAPTURE_ADMIN |

CAPTURE_ADMIN |

| IMP_FULL_DATABASE |

IMP_FULL_DATABASE |

| AQ_ADMINISTRATOR_ROLE |

AQ_ADMINISTRATOR_ROLE |

| EM_EXPRESS_BASIC |

EM_EXPRESS_BASIC |

| EM_EXPRESS_ALL |

EM_EXPRESS_ALL |

| DELETE_CATALOG_ROLE |

DELETE_CATALOG_ROLE |

| SODA_APP |

SODA_APP |

| RECOVERY_CATALOG_USER |

RECOVERY_CATALOG_USER |

| CONNECT |

CONNECT |

| OEM_ADVISOR |

OEM_ADVISOR |

| OEM_MONITOR |

OEM_MONITOR |

| SELECT_CATALOG_ROLE |

SELECT_CATALOG_ROLE |

| HS_ADMIN_SELECT_ROLE |

HS_ADMIN_SELECT_ROLE |

| DBA |

DBA |

| RESOURCE |

RESOURCE |

| |

RDS_MASTER_ROLE |

| DATAPUMP_EXP_FULL_DATABASE |

DATAPUMP_IMP_FULL_DATABASE |

| XDB_SET_INVOKER |

XDB_SET_INVOKER |

| GATHER_SYSTEM_STATISTICS |

GATHER_SYSTEM_STATISTICS |

| SCHEDULER_ADMIN |

SCHEDULER_ADMIN |

| RECOVERY_CATALOG_OWNER |

RECOVERY_CATALOG_OWNER |

| HS_ADMIN_EXECUTE_ROLE |

HS_ADMIN_EXECUTE_ROLE |

| AQ_USER_ROLE |

AQ_USER_ROLE |

| EXP_FULL_DATABASE |

EXP_FULL_DATABASE |

Now I will give 3 role for user which I created like below.

grant connect, resource, dba to virginiauser; |

After this I can check the role status.

So far, I studied how to grant the privileges. However, I need to revoke these sometimes.

revoke connect, resource, dba from virginiauser; |

After granting roles to user, I need to give some privileges to this user. In oracle, there are two types of privileges, system and table. At first, I will grant some privileges for the system.

| RDSADMIN |

CRENETADMIN |

| INHERIT ANY

PRIVILEGES |

|

| GRANT ANY

OBJECT PRIVILEGE |

GRANT ANY OBJECT

PRIVILEGE |

| DROP ANY

DIRECTORY |

DROP ANY DIRECTORY |

| UNLIMITED

TABLESPACE |

UNLIMITED TABLESPACE |

| EXEMPT

REDACTION POLICY |

EXEMPT REDACTION

POLICY |

| CHANGE

NOTIFICATION |

CHANGE NOTIFICATION |

| FLASHBACK ANY

TABLE |

FLASHBACK ANY TABLE |

| ALTER SYSTEM |

|

| ALTER PUBLIC

DATABASE LINK |

ALTER PUBLIC DATABASE

LINK |

| EXEMPT ACCESS

POLICY |

EXEMPT ACCESS POLICY |

| ALTER DATABASE |

|

| SELECT ANY

TABLE |

SELECT ANY TABLE |

| RESTRICTED

SESSION |

RESTRICTED SESSION |

| ALTER DATABASE

LINK |

ALTER DATABASE LINK |

| CREATE

EXTERNAL JOB |

|

| EXEMPT

IDENTITY POLICY |

EXEMPT IDENTITY

POLICY |

| ADMINISTER

DATABASE TRIGGER |

|

| GRANT ANY ROLE |

|

I can give(remove) some system privileges like below.

grant UNLIMITED TABLESPACE TO myadmin; revoke UNLIMITED TABLESPACE from myadmin; |

After this, I can verify the status with "select * from dba_sys_privs" query.

Now, the table privileges left. In fact, I can not explain about this without the table. I will add more detail later in this post. Just look at the command how to see.

select * from dba_tab_privs where grantee='CRENETADMIN'; |

5. Create(Drop) Table

Before I create the Data table, I need to create table space. In this instruction, what kinds of parameters are required is explained. This command show the table spaces list.

select * from dba_tablespaces; |

There are some necessary factor for this table space. Fist is "Contents". There are three values to category. In the instruction, there are comments like below.

A permanent tablespace contains persistent schema objects. Objects in permanent tablespaces are stored in datafiles.

An undo tablespace is a type of permanent tablespace used by Oracle Database to manage undo data if you are running your database in automatic undo management mode. Oracle strongly recommends that you use automatic undo management mode rather than using rollback segments for undo.

A temporary tablespace contains schema objects only for the duration of a session. Objects in temporary tablespaces are stored in tempfiles. |

From this statements above, "Undo tablespace" is not friendly rather than others. Please, read these instructions, https://oracle-base.com/articles/9i/automatic-undo-management and https://docs.oracle.com/cd/B28359_01/server.111/b28310/undo002.htm#ADMIN11462. For this "Undo tablespace", "automatic undo management" is required. The recently version is set as defaults. Also, I tried to change this configuration in AWS RDS. Howerer, I can not alter this.

Second is the datafile conecpt such as "bigfile" or "smallfile". In this instruction,

A bigfile tablespace contains only one datafile or tempfile, which can contain up to approximately 4 billion (232) blocks. The maximum size of the single datafile or tempfile is 128 terabytes (TB) for a tablespace with 32K blocks and 32TB for a tablespace with 8K blocks.

A smallfile tablespace is a traditional Oracle tablespace, which can contain 1022 datafiles or tempfiles, each of which can contain up to approximately 4 million (222) blocks. |

From this statements, I can not understand "smallfile tablespace can contain multiple datafiles". Please look at this link,

create tablespace homeworkts datafile 'D:\oradata\orcl\df1.dbf' size 4m, 'D:\oradata\orcl\df2.dbf' size 4m, 'D:\oradata\orcl\df3.dbf' size 4m; |

However, this command does not work in AWS RDS. Because RDS does not give any permission for this like below. I can not create the datafile.

There are so many options, however I can not handle all of things. I think I can create tablespace with these information. I hope this instruction will be helpful.

# Permament-Bigfile tablespace create bigfile tablespace myspace;

# Permament-Bigfile tablespace create bigfile temporary tablespace mytemp; |

After this command, I can see the "select * from dba_tablespaces;" and "select * from dba_data_files;"

select tablespace_name,contents,bigfile from dba_tablespaces; |

select file_name,tablespace_name,autoextensible from dba_data_files; |

Now, I created table spaces. I can start to create table. Please look at this, there are sample example to create table.

| create table user.info ( name varchar2(15), id number(10) ) tablespace myspace; |

This command create "error" like below. This error is occurred by table name.

In oracle, table name must be "schema (=user)"+"table name". This is the reason why the schema (=user) is import. Because of this, I have to revise the command below. "MYADMIN" is created user before.

create table MYADMIN.user_info ( name varchar2(15), id number(10) );

|

After this command I can see the table information with "select * from dba_tables;"

select * from dba_tables; select * from dba_tables where owner='MYADMIN'; |

There are 2 things special. I insert "MYADMIN.user_info" as the table name. Howerver, oracle re-arrange this table name with "owner" and "table_name". Also, "USERS" tablespace is allocated for this table, which is default permanent tablespace. However I want that this table is located in "MYSAPCE" table space. Thus, it should be revised like below.

create table MYADMIN.user_info ( name varchar2(15), id number(10) ) tablespace myspace; |

However, I can create this. Because this table name has already existed. Thus I need to drop this table with this command.

drop table MYADMIN.user_info; |

Now, this is what I want.

6. Insert, Update, Delete Data

Before start this part, I need to covert account with "MYADMIN", which is used as the "schema name".

select * from user_tables; |

"user_tables" show the information which belonged to logon account. If I can see table only I create, it is OK.

However, there is other way to find out logon current user. In this instruction, "select user from dual;" is command.

I will follow this instruction. At first, I will try to insert some data into "user_info" table;

insert into user_info (id, name) values (101,'fox'); insert into MYADMIN.user_info (id, name) values (102,'wolf'); |

Please note that I can use both of "schema+table name" and "table name", because the current user is same as the schema name. After this command, look at the table information like below.

select * from MYADMIN.user_info; |

Now, I will update the data. Please note I use "schema+table name" as the table name. It does not affect the result.

update MYADMIN.user_info set ID=103 where name='wolf'; |

Finally, Delete is left. This is not difficult to run.

delete from user_info where id=103; |

7. Troubleshooting. (table privileges with other schema)

I am happy to learn how to insert, update and delete data. However, I need to think about my privileges. So far, I did not give any permission about table. The reason why this can be done is that this user is the "schema user". It look like admin user for this table. If I create another user (=schema), I wonder if this user can insert, update and delete with this table.

# create new (another) user create user myuser indentified by myuser; |

This user does not any role and privileges at the beginning.

I can not logon into the Database. I add the role with "connect"

Now, I can login. However, I can not select, insert, update and delete over table. I need to give some privileges.

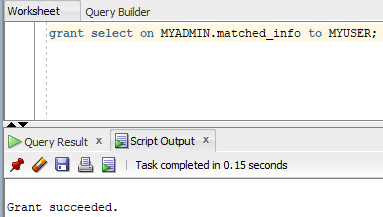

With administrator account, I will give this user some permissions. Please, look at the this instruction.

grant select, insert, update, delete on MYADMIN.user_info to MYUSER; |

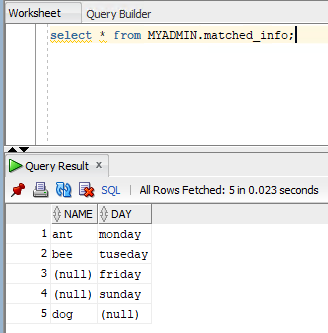

Now, I can select, insert, update and delete.

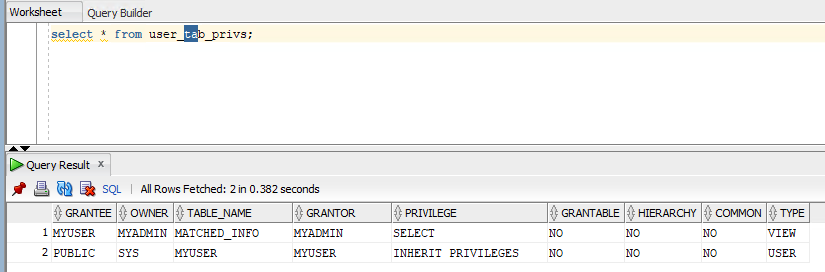

If I want to know what kinds of table privileges does this user has, use this command

select * from dba_tab_privs where grantee='MYUSER'; |

This is basic oracle database concept. I hope it will be helpful. If I have enough time to learn, I will handle more. However, I am not DBA engineer. I hope I can keep touch about this part again.

Reference

[ 1 ] https://docs.aws.amazon.com/ko_kr/AmazonRDS/latest/UserGuide/USER_ConnectToOracleInstance.html

[ 2 ] https://www.oracle.com/technetwork/developer-tools/sql-developer/downloads/index.html

[ 3 ] https://docs.oracle.com/cd/B28359_01/server.111/b28286/statements_8003.htm#SQLRF01503

[ 4 ] https://chartio.com/resources/tutorials/how-to-create-a-user-and-grant-permissions-in-oracle/

[ 5 ] https://docs.oracle.com/cd/B19306_01/server.102/b14200/statements_7003.htm

[ 6 ] https://stackoverflow.com/questions/8496152/how-to-create-a-tablespace-with-multiple-datafiles

[ 7 ] https://docs.oracle.com/en/database/oracle/oracle-database/12.2/sqlrf/CREATE-TABLESPACE.html#GUID-51F07BF5-EFAF-4910-9040-C473B86A8BF9

[ 8 ] https://www.java2s.com/Code/Oracle/User-Previliege/Getcurrentusername.htm

[ 9 ] https://www.oracle-dba-online.com/sql/insert_update_delete_merge.htm